25. From RNN to LSTM

Before we examine the Long Short-Term Memory (LSTM) cell closely, let's watch the following video:

Video: From RNNs to LSTMs

Long Short-Term Memory (LSTM) cells address the vanishing gradient problem, which lets us train networks that have long-range temporal dependencies. They were proposed in 1997 by Sepp Hochreiter and Jürgen Schmidhuber.

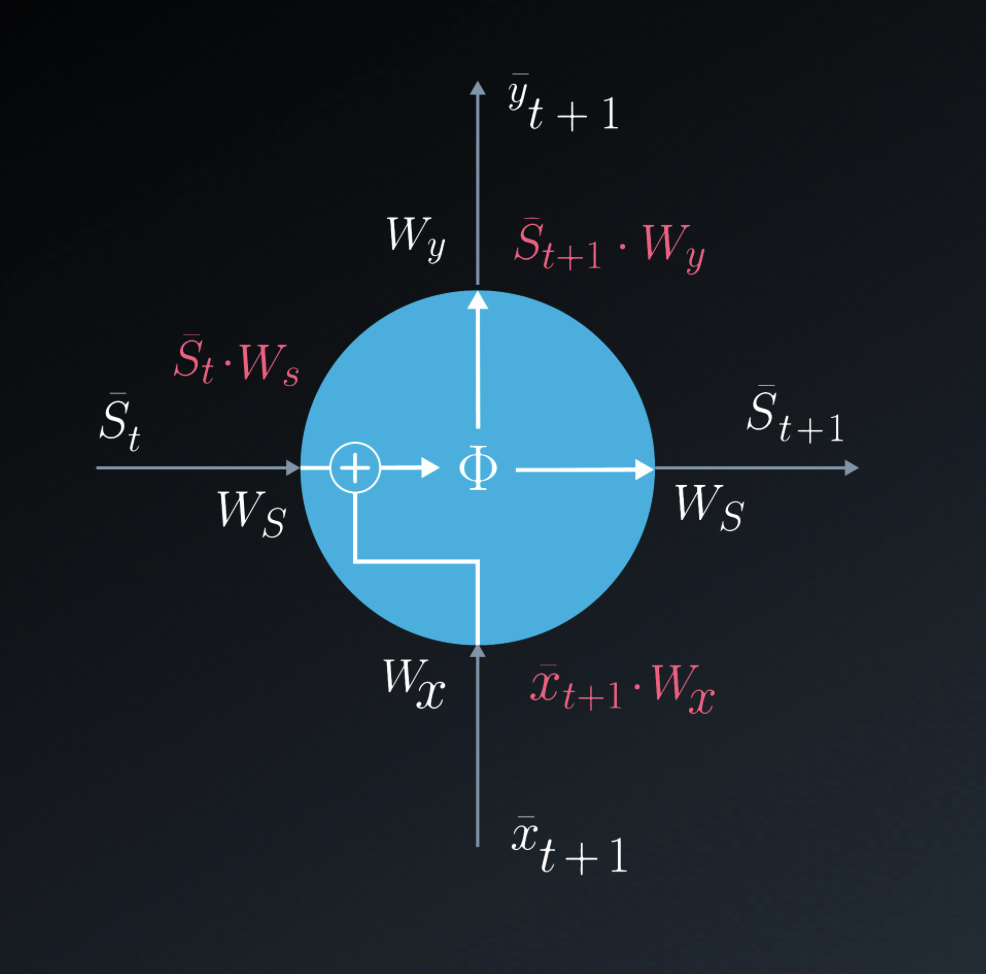

Looking closely at the RNN neuron, we see simple linear combinations (with or without an activation function) and a single addition.

Zooming in on the neuron, we can see this structure graphically:

Closeup Of The RNN Neuron

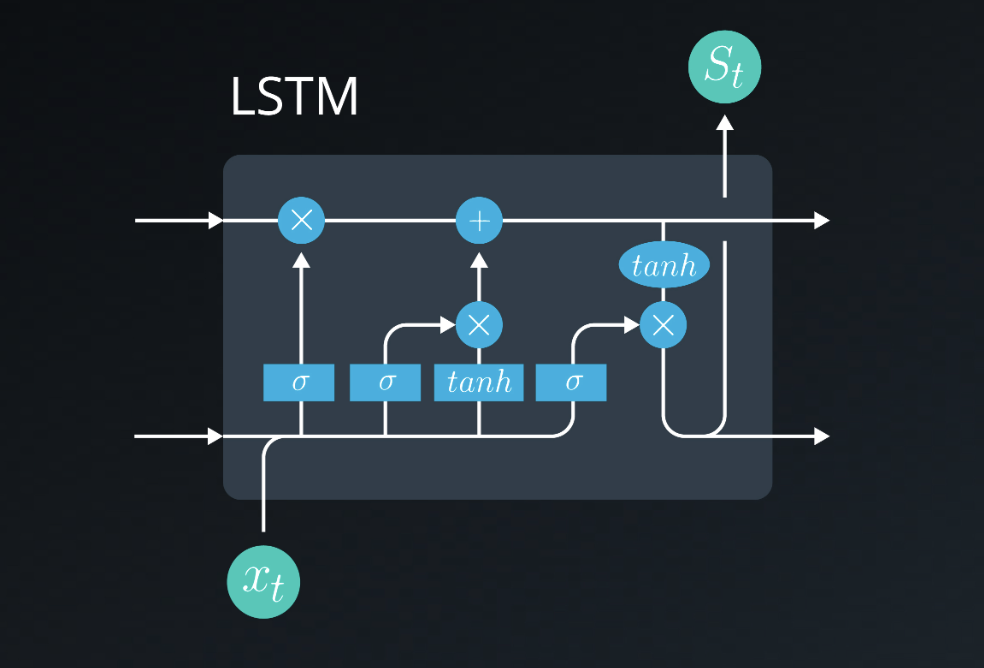
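As a minimal sketch of one step of the RNN neuron described above, the NumPy code below assumes a tanh activation and uses hypothetical names: `W_x` (input-to-state weights), `W_h` (state-to-state weights) and `b` (bias). The two linear combinations are summed once, together with the bias, and then passed through the activation.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One step of a vanilla RNN neuron (tanh activation is an assumption)."""
    # Two linear combinations (current input and previous state) are added
    # together with the bias, then squashed by the activation function.
    z = x_t @ W_x + h_prev @ W_h + b
    return np.tanh(z)

# Hypothetical sizes: 3 input features, 4 hidden units, 5 time steps.
rng = np.random.default_rng(0)
W_x = rng.normal(size=(3, 4))
W_h = rng.normal(size=(4, 4))
b = np.zeros(4)

h = np.zeros(4)                      # initial hidden state
for x_t in rng.normal(size=(5, 3)):  # iterate over the sequence, one row per step
    h = rnn_step(x_t, h, W_x, W_h, b)
print(h.shape)  # (4,)
```

The same weights are reused at every time step; only the input and the previous state change.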

The LSTM cell is more complicated. Zooming in on the cell, we see the following mathematical configuration:

Closeup Of The LSTM Cell
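One commonly used way to write the LSTM cell's equations is sketched below. The notation is an assumption and may differ from the lesson's figure: $W$ and $b$ are weights and biases, $\sigma$ is the logistic sigmoid, $\odot$ is elementwise multiplication, and $[h_{t-1}, x_t]$ is the concatenation of the previous hidden state and the current input. The forget gate $f_t$ was a later addition to the original 1997 design.

$$
\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c [h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The key difference from the RNN neuron is the cell-state update $c_t$: the previous cell state is carried forward additively, scaled by a gate, instead of being passed through a squashing function at every step.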

The LSTM cell lets a recurrent network learn over many time steps with far less risk of losing information to the vanishing gradient problem. Because it is fully differentiable, its weights can be updated with ordinary backpropagation (through time).
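A rough numeric illustration of why this helps, under deliberately simplified assumptions: in a scalar vanilla RNN $h_t = \tanh(w h_{t-1} + x_t)$, the gradient $\partial h_T / \partial h_0$ is a product of $T$ factors $w(1 - h_t^2)$, each at most $|w|$ in magnitude, so it shrinks exponentially when $|w| < 1$. Along the LSTM cell-state path $c_t = f c_{t-1} + i_t g_t$, each factor is just the forget-gate value $f$ if the gates are held fixed (this ignores the indirect paths through the gates and $h$). The function names, weight, inputs and forget-gate value below are hypothetical.

```python
import math

def rnn_gradient(w, inputs, h0=0.0):
    """d h_T / d h_0 for a scalar vanilla RNN: h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # chain rule: d h_t / d h_{t-1}
    return grad

def lstm_cell_path_gradient(forget, steps):
    """d c_T / d c_0 along the cell-state path c_t = f * c_{t-1} + i_t * g_t.

    Hypothetical simplification: the forget gate is held constant, and
    gradients flowing through the gates and the hidden state are ignored.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= forget  # d c_t / d c_{t-1} = f when the gates are fixed
    return grad

steps = 50
print(rnn_gradient(0.5, [0.1] * steps))      # below 0.5**50, i.e. under 1e-15
print(lstm_cell_path_gradient(0.99, steps))  # about 0.605
```

With a forget gate close to 1, the signal along the cell state decays slowly, while the RNN's repeated multiplication by small factors drives its gradient toward zero.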

In the next set of videos, Luis will help you understand LSTMs further.